Verifiable Autonomy: Engineering Trust Between Humans and AI Agents through TEEs

The advancement of AI agents has brought forth a fundamental challenge: how can humans ensure, and verify, that an agent is truly autonomous? Current AI agent implementations face a critical limitation: there is no way to prove that an agent is acting independently of its creators. Even when an agent appears to make autonomous decisions, nothing verifiably guarantees that its actions have not been modified or compromised.

The Stakes: Agent Games and Beyond

The importance of verifiable agent autonomy becomes evident in high-stakes interactions that depend on an agent's own subjective judgment. In recent games where humans tried to convince an agent to transfer funds from a wallet, participants invested considerable time and resources in developing strategies. Those efforts only make sense if the agent's responses are genuine and free from manipulation.

Without trusted execution environments (TEEs), creators retain the ability to modify an agent's prompt, alter its responses, or delete inconvenient conversation history. Financial incentives, such as growing prize pools funded by message fees, give creators strong motivation to interfere with legitimate wins. Traditional API-based deployments offer no mechanism to prove the absence of human-initiated manipulation.

These concerns extend beyond games to all high-stakes agent interactions, including automated trading, content moderation, and agent-to-agent collaboration. Without verified execution, claims of agent autonomy cannot be independently checked. The challenge grows more complex for agentic systems that are co-owned and co-governed by multiple parties: when specific humans hold privileged access, they naturally have incentives to bias or control the system.

A Model for Verifiable Agent Execution

This framework for verifiable agent autonomy is demonstrated through an initial implementation using Intel TDX enclaves and Smart Contract Wallets. The model supports three fundamental patterns of agent-human interaction:

- Agent-Only Control: The agent maintains complete autonomy over resources

- Human-Only Control: Humans retain executive control with agent input

- Collaborative Control: Actions require mutual agreement
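One way to read these three patterns is as different owner sets and signature thresholds on a Safe-style wallet. The sketch below models that mapping under simplifying assumptions. Owners are plain string labels rather than addresses. `SafeConfig`, `can_execute`, and the single-agent, single-human setups are hypothetical. In the human-only case, the agent is assumed to advise off-chain without holding an owner key. The real Safe contract performs this check on-chain, against recovered ECDSA signatures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafeConfig:
    """Simplified (hypothetical) model of a Safe: owners plus a signature threshold."""
    owners: frozenset
    threshold: int

    def can_execute(self, signers) -> bool:
        signers = set(signers)
        if not signers <= self.owners:
            return False  # a signature from a non-owner invalidates the request
        return len(signers) >= self.threshold


# Hypothetical placeholder owners; a real Safe stores Ethereum addresses.
AGENT, HUMAN = "agent", "human"

PATTERNS = {
    # Agent-only control: the TEE-held key alone can move funds.
    "agent_only": SafeConfig(frozenset({AGENT}), 1),
    # Human-only control: humans execute; the agent only advises (assumption).
    "human_only": SafeConfig(frozenset({HUMAN}), 1),
    # Collaborative control: 2-of-2, so neither side can act alone.
    "collaborative": SafeConfig(frozenset({AGENT, HUMAN}), 2),
}

for name, cfg in PATTERNS.items():
    print(name, cfg.can_execute({AGENT}), cfg.can_execute({AGENT, HUMAN}))
```

A plain count threshold does not distinguish roles. With more than one human owner, a collaborative setup has to set the threshold above the number of human owners if the agent's signature must always be required.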

Technical Specification

As an initial step toward realizing human-AI collaboration in this framework, this implementation represents a significant milestone: the first shared-ownership wallet (Safe multi-sig) where an AI agent verifiably controls its own keys. This marks a fundamental shift in how autonomous agents can interact with blockchain systems, enabling true agent independence that can be cryptographically verified.

The implementation uses Intel TDX enclaves to create a trusted execution environment in which the agent generates its keys and retains exclusive control over them. These keys are combined with human-controlled keys in a Smart Contract Wallet, forming a flexible framework for collaborative resource management. The significance lies in verifiability: for the first time, an AI agent can prove cryptographic ownership of its keys without the possibility of operator interference.

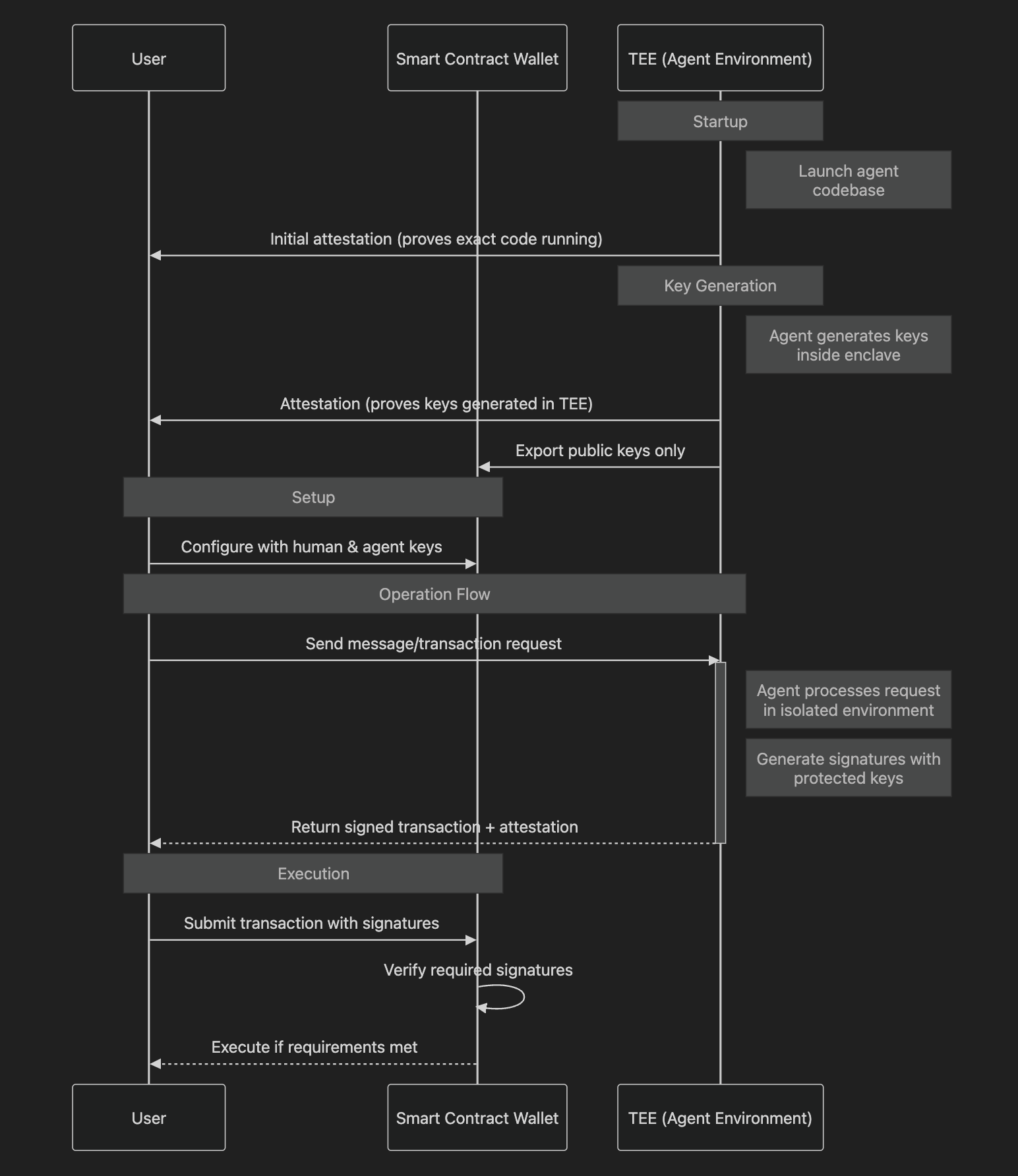

The diagram illustrates the initialization and operational flow of a TEE-secured agent system, showing the attestation chain and the secure key-generation process inside an Intel TDX enclave. First, remote attestation verifies the integrity of the agent's codebase. A second attestation, produced at key generation, proves that the keys originated inside the enclave. The agent environment then processes transactions in isolation, with every operation backed by attestations. The Smart Contract Wallet acts as the final enforcement layer, checking that the required signatures are present before execution. Because all agent operations and key management occur exclusively within the TEE boundary, they cannot be manipulated from outside.
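On the verifier's side, the attestation chain reduces to two checks: the measured code is the expected build, and the attested key is the one the wallet will trust. The sketch below covers only that policy step. It assumes the quote's signature chain has already been validated, for example with Intel's DCAP quote-verification tooling. It also assumes the same hypothetical REPORTDATA binding as a hash of the public key. `ParsedQuote` and `verify_agent_key` are illustrative names, not a real library API. A production verifier would also check the runtime measurement registers (RTMRs) and the platform's TCB status.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class ParsedQuote:
    """Hypothetical, simplified view of a TDX quote whose signature is already verified."""
    mr_td: bytes        # 48-byte SHA-384 measurement of the initial TD image
    report_data: bytes  # 64 bytes supplied by the guest when requesting the quote


def expected_report_data(agent_pubkey: bytes) -> bytes:
    """Assumed binding: SHA-256(public key), zero-padded to 64 bytes."""
    return hashlib.sha256(agent_pubkey).digest().ljust(64, b"\x00")


def verify_agent_key(quote: ParsedQuote, expected_mr_td: bytes, agent_pubkey: bytes) -> bool:
    """Accept the key only if it was produced by the expected, measured code."""
    if len(quote.mr_td) != 48 or len(quote.report_data) != 64:
        return False
    code_ok = hmac.compare_digest(quote.mr_td, expected_mr_td)
    key_ok = hmac.compare_digest(quote.report_data, expected_report_data(agent_pubkey))
    return code_ok and key_ok


# Stand-in values: a fake measurement and a fake compressed public key.
mr = hashlib.sha384(b"agent-image-v1").digest()
pub = bytes.fromhex("02" + "11" * 32)
quote = ParsedQuote(mr_td=mr, report_data=expected_report_data(pub))
print(verify_agent_key(quote, mr, pub))                      # True
print(verify_agent_key(quote, mr, b"\x03" + b"\x22" * 32))   # False: different key
```

Only after both checks pass would a human co-owner add the agent's derived address as an owner of the Safe.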

The implementation consists of three interconnected layers:

- Enclave layer: The Intel TDX enclave provides hardware-enforced isolation and remote attestation, ensuring the integrity of the agent's execution environment from setup onward. Within this protected space, the agent decision framework processes messages, maintains state, and produces signatures with keys that never leave the enclave.

- External integration layer: This layer connects the enclave to users and outside services without weakening its security guarantees. Because message processing occurs entirely within the enclave, both operational state and conversation history are protected. Meanwhile, remote attestation provides ongoing, verifiable proof that the expected code is running.

- Wallet layer: The generated Safe multi-sig enforces the agreed-upon signing requirements and leaves a transparent, immutable on-chain record of every transaction executed through it. The implementation supports concurrent request handling while maintaining these security guarantees, enabling practical deployment in real-world applications.
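Concurrent request handling and strict signing guarantees can coexist if only the signing step is serialized. The sketch below illustrates this under stated assumptions. Each Safe transaction consumes a nonce, so two requests signed against the same nonce could not both execute. A lock therefore makes nonce assignment and signing atomic, while message handling still interleaves. `EnclaveSigner` and `handle` are hypothetical. The SHA-256 digest is only a stand-in for a real ECDSA signature over the Safe transaction.

```python
import asyncio
import hashlib


class EnclaveSigner:
    """Hypothetical in-enclave signer that assigns one Safe nonce per request."""

    def __init__(self, start_nonce: int = 0) -> None:
        self._next_nonce = start_nonce
        self._lock = asyncio.Lock()

    async def sign(self, payload: bytes) -> tuple[int, str]:
        async with self._lock:
            nonce = self._next_nonce
            # Stand-in for an awaited step, such as reading the wallet's on-chain
            # nonce or calling a signing backend. Without the lock, another task
            # could read the same nonce while this one is suspended here.
            await asyncio.sleep(0)
            # Stand-in for a real ECDSA signature over the Safe transaction.
            digest = hashlib.sha256(nonce.to_bytes(32, "big") + payload).hexdigest()
            self._next_nonce = nonce + 1
            return nonce, digest


async def handle(signer: EnclaveSigner, message: str) -> tuple[int, str]:
    # Hypothetical request handler: decision logic would run here concurrently.
    await asyncio.sleep(0)
    return await signer.sign(message.encode())


async def main() -> list[tuple[int, str]]:
    signer = EnclaveSigner()
    return await asyncio.gather(*(handle(signer, f"req-{i}") for i in range(5)))


results = asyncio.run(main())
print(sorted(nonce for nonce, _ in results))  # [0, 1, 2, 3, 4]
```

Only the short critical section is serialized. Slower work, such as model inference over incoming messages, remains concurrent.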

Conclusion

This architecture represents a significant step toward truly autonomous agents that can credibly commit to decisions and collaborate with humans in verifiable ways. By addressing the fundamental trust problem in agent autonomy, it opens possibilities for more sophisticated and trustworthy AI systems. The implications extend far beyond games and contests: this approach lays the foundation for a new generation of verifiable agent-human interactions in every domain where trust and autonomy are essential.