Reality Oracles: Verifiable Data as the Catalyst for AI Coordination

Previous work has demonstrated autonomous ownership of private keys within trusted execution environments (TEEs). A fundamental challenge in building agentic systems is access to reliable, verifiable information about the external world. Current AI systems cannot prove the authenticity and integrity of the data they consume. Even when they read from seemingly authoritative sources, there is no verifiable guarantee that the information arrived unmodified and uncompromised. Roots of trust need to be made explicit.

The Stakes: Data Integrity and Agent Trust

The importance of verifiable data becomes evident in high-stakes automated decision-making. Ordinary APIs and websites do not provide data that a third party can verify. TLS protects the connection between client and server, but the client cannot later prove to anyone else what the server actually sent. Some sources, such as price oracles and weather services, publish cryptographically signed data that can be verified directly, but most web content has no built-in source verification. A TEE-based mechanism can prove the integrity of data flowing from standard web sources to AI systems. The benefit extends beyond simple data consumption to all high-stakes automated interactions, including trading, content moderation, and agent-to-agent collaboration.
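Why can't a client simply forward what it received over TLS? TLS authenticates records with keys shared by both ends of the connection. The sketch below uses Python's `hmac` module as a simplified stand-in for TLS record authentication. TLS 1.3 actually uses AEAD ciphers, but the shared-key property is the same. The session key, prices, and helper names are illustrative only.

```python
import hashlib
import hmac
import secrets

# Simplified stand-in for a TLS session: client and server end up holding
# the same symmetric key after the handshake (modeled as one random key).
session_key = secrets.token_bytes(32)


def tag(key: bytes, record: bytes) -> bytes:
    """Authenticate a record with a symmetric MAC."""
    return hmac.new(key, record, hashlib.sha256).digest()


def verify(key: bytes, record: bytes, record_tag: bytes) -> bool:
    """Constant-time check that the tag matches the record."""
    return hmac.compare_digest(tag(key, record), record_tag)


# The server sends a genuine record.
genuine = b'{"BTC-USD": 64000}'
genuine_tag = tag(session_key, genuine)

# The client also holds the key, so it can fabricate a different record
# whose tag verifies just as well.
forged = b'{"BTC-USD": 1}'
forged_tag = tag(session_key, forged)

print(verify(session_key, genuine, genuine_tag))  # True
print(verify(session_key, forged, forged_tag))    # True: indistinguishable
```

A third party cannot tell the two transcripts apart. An independent observer, here the TEE, therefore has to witness the session and sign what it saw.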

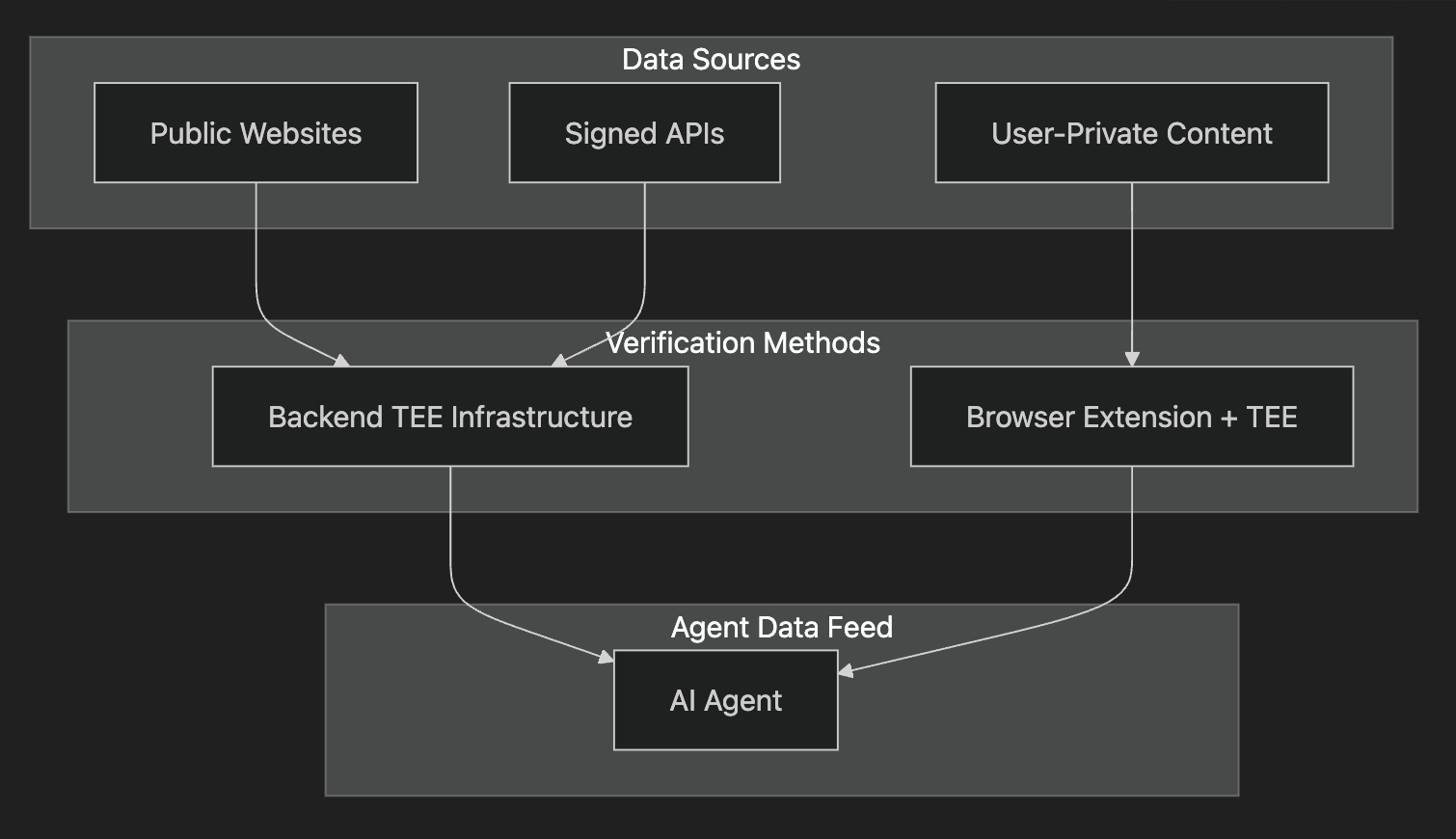

Dual Paths to Verifiable Data

Direct Backend Verification

For public websites, and for sources accessed with designated credentials, a backend TEE service attests to the data directly without requiring user intervention. This enables automated verification of public data such as market prices, weather information, and news feeds. It also covers API-authenticated data where the agentic system holds dedicated access credentials.
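The backend path can be pictured as a function that runs inside the enclave. It fetches a source, hashes the response, and signs a statement binding the URL, hash, and time. This is a minimal sketch under stated assumptions. `attest_public_source`, `SourceAttestation`, and the injected `fetch` and `sign` callables are hypothetical names. For API-authenticated sources, `fetch` would attach the agent's credentials inside the enclave. `sign` stands for the enclave's private signing key. The hash-based "signer" in the usage lines is a placeholder, not a real signature.

```python
import hashlib
import time
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SourceAttestation:
    url: str
    content_hash: str  # hex SHA-256 of the response body
    timestamp: int     # Unix seconds at observation time
    signature: bytes   # output of the enclave's signing function


def attest_public_source(
    url: str,
    fetch: Callable[[str], bytes],
    sign: Callable[[bytes], bytes],
    now: Callable[[], float] = time.time,
) -> SourceAttestation:
    """Fetch `url` inside the enclave and sign a statement about what was seen."""
    body = fetch(url)  # hypothetical HTTPS client running inside the TEE
    content_hash = hashlib.sha256(body).hexdigest()
    timestamp = int(now())
    # Bind URL, hash, and time into one message so none can be swapped alone.
    message = f"{url}\n{content_hash}\n{timestamp}".encode()
    return SourceAttestation(url, content_hash, timestamp, sign(message))


# Usage with stubs; the "signer" below is a placeholder, not a real signature.
att = attest_public_source(
    "https://example.com/price",
    fetch=lambda u: b'{"price": 100}',
    sign=lambda m: hashlib.sha256(b"placeholder-key" + m).digest(),
    now=lambda: 1_700_000_000,
)
print(att.content_hash[:16], att.timestamp)
```

Injecting `fetch`, `sign`, and `now` keeps the sketch testable without network access or enclave hardware.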

User-Mediated Verification

The more nuanced challenge lies in verifying private, user-authenticated content. A soon-to-launch browser extension will enable humans to selectively share verified information from authenticated sessions with AI agents. Through this mechanism, any human can attest to specific information from private webpages while maintaining control over sensitive data. This provides a foundation for fine-grained access control in agent interactions.
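One common way to share only part of an authenticated page is to reveal chosen byte ranges of the response and mask everything else. The sketch below shows only that redaction step, assuming half-open `[start, end)` ranges. `redact` and the mask byte are hypothetical. In a complete system, the revealed ranges would also have to be provably bound to the attested transcript, for example through per-range commitments. That binding is not shown here.

```python
from typing import Iterable, Tuple

MASK = ord("X")  # hypothetical placeholder byte for hidden content


def redact(transcript: bytes, reveal: Iterable[Tuple[int, int]]) -> bytes:
    """Return `transcript` with every byte outside the half-open
    [start, end) ranges in `reveal` replaced by MASK.

    The output keeps the original length, so revealed bytes stay at their
    original offsets and can be checked against the attested transcript.
    """
    out = bytearray([MASK]) * len(transcript)
    for start, end in reveal:
        if not 0 <= start <= end <= len(transcript):
            raise ValueError(f"range ({start}, {end}) is out of bounds")
        out[start:end] = transcript[start:end]
    return bytes(out)


page = b"Name: Alice; Balance: 1200 USD; Email: alice@example.com"
# Reveal only the balance field; name and email stay hidden.
start = page.index(b"Balance")
end = page.index(b";", start)
print(redact(page, [(start, end)]))
```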

This architecture enables a range of use cases:

- selective disclosure of information from private webpages
- verification that a user meets an age requirement
- authentication of private messages without sharing their full content
- timestamped records of interactions with web content

In each case, users contribute verifiable information while keeping fine-grained control over what is shared.
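Age-requirement checks show how an enclave can evaluate a predicate over private data and attest only the result. This minimal sketch assumes the date of birth has already been extracted from an authenticated page. `age_in_years` and `age_claim` are hypothetical helpers. In practice, the resulting claim would be signed like any other attestation.

```python
from datetime import date


def age_in_years(birth: date, on: date) -> int:
    """Number of completed years between `birth` and `on`."""
    had_birthday = (on.month, on.day) >= (birth.month, birth.day)
    return on.year - birth.year - (0 if had_birthday else 1)


def age_claim(birth: date, on: date, minimum: int) -> dict:
    """Build a claim that reveals only whether the age threshold is met.

    The birth date itself never appears in the output.
    """
    return {
        "predicate": f"age >= {minimum}",
        "evaluated_on": on.isoformat(),
        "result": age_in_years(birth, on) >= minimum,
    }


print(age_claim(date(2007, 6, 15), on=date(2025, 6, 14), minimum=18))
# {'predicate': 'age >= 18', 'evaluated_on': '2025-06-14', 'result': False}
```

The agent receiving the claim learns one bit and the evaluation date. It learns nothing about the underlying birth date.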

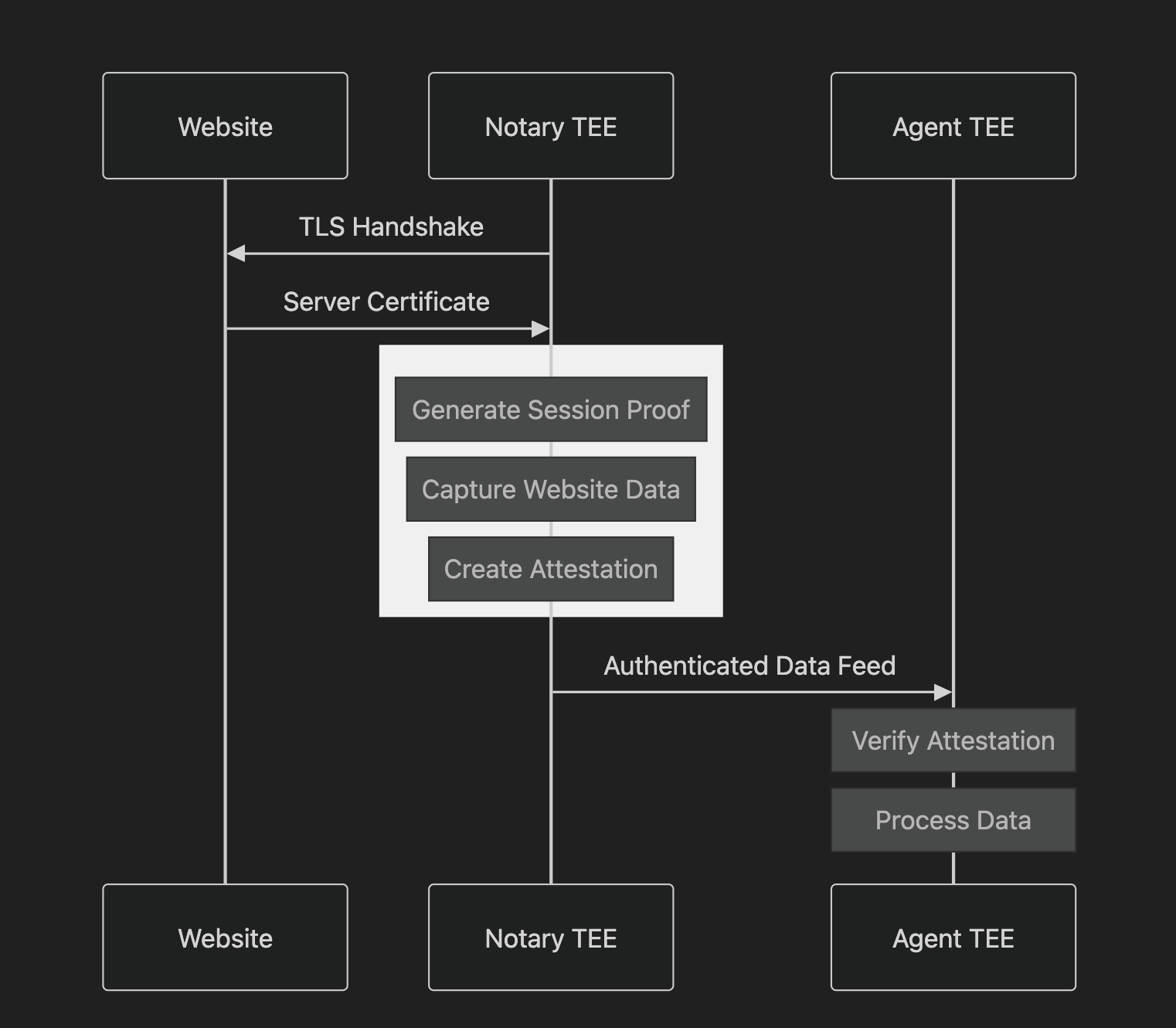

Core Technical Architecture

At the heart of both verification approaches lies a common TEE-based attestation mechanism. Secure enclaves act as trusted intermediaries: they observe web content directly and produce signed attestations about it. This enables verifiable receipt of data from any website without requiring changes to existing infrastructure. A sequence diagram of the interaction flow between components follows the list below.

Each attestation includes four critical components:

- The content hash is a cryptographic fingerprint of the observed data. Any modification to the data is therefore detectable and invalidates the attestation.

- The timestamp records when the observation took place. Because it is signed, it gives decision-making a verifiable, tamper-evident timeline.

- The TEE's remote attestation proves that the observation took place inside a secure enclave, providing hardware-level guarantees of data and codebase integrity.

- The TLS session proof shows that the code running in the TEE validated the secure connection to the source website. This completes the chain of trust from the source to the destination (a powerful agentic system).
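A consuming agent needs all four components to check out before it acts on the data. The sketch below models that check. Verifying a remote-attestation quote or a TLS session proof is platform-specific, so `check_quote` and `check_tls_proof` are hypothetical hooks supplied by the caller. `AttestationRecord`, `verify_record`, and the freshness window `max_age_s` are also illustrative names, not an existing API.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AttestationRecord:
    content_hash: str  # hex SHA-256 of the observed data
    timestamp: int     # Unix seconds when the enclave observed the data
    tee_quote: bytes   # remote-attestation evidence from the enclave
    tls_proof: bytes   # evidence that the enclave validated the TLS session


def verify_record(
    record: AttestationRecord,
    content: bytes,
    now: int,
    max_age_s: int,
    check_quote: Callable[[bytes], bool],
    check_tls_proof: Callable[[bytes, str], bool],
) -> bool:
    """Accept `content` only if every component of the record checks out."""
    # 1. Content hash: any modification changes the digest.
    if hashlib.sha256(content).hexdigest() != record.content_hash:
        return False
    # 2. Timestamp: reject observations from the future or older than the window.
    if not 0 <= now - record.timestamp <= max_age_s:
        return False
    # 3. Remote attestation: platform-specific check supplied by the caller.
    if not check_quote(record.tee_quote):
        return False
    # 4. TLS session proof: the caller's check must tie it to this content hash.
    return check_tls_proof(record.tls_proof, record.content_hash)


# Usage with stub checks standing in for real platform verification.
data = b'{"price": 100}'
rec = AttestationRecord(hashlib.sha256(data).hexdigest(), 1000, b"quote", b"proof")
accepted = verify_record(
    rec, data, now=1030, max_age_s=60,
    check_quote=lambda q: q == b"quote",
    check_tls_proof=lambda p, h: p == b"proof",
)
print(accepted)  # True
```

The cheap local checks run first, so tampered or stale data is rejected before any platform verification is attempted.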

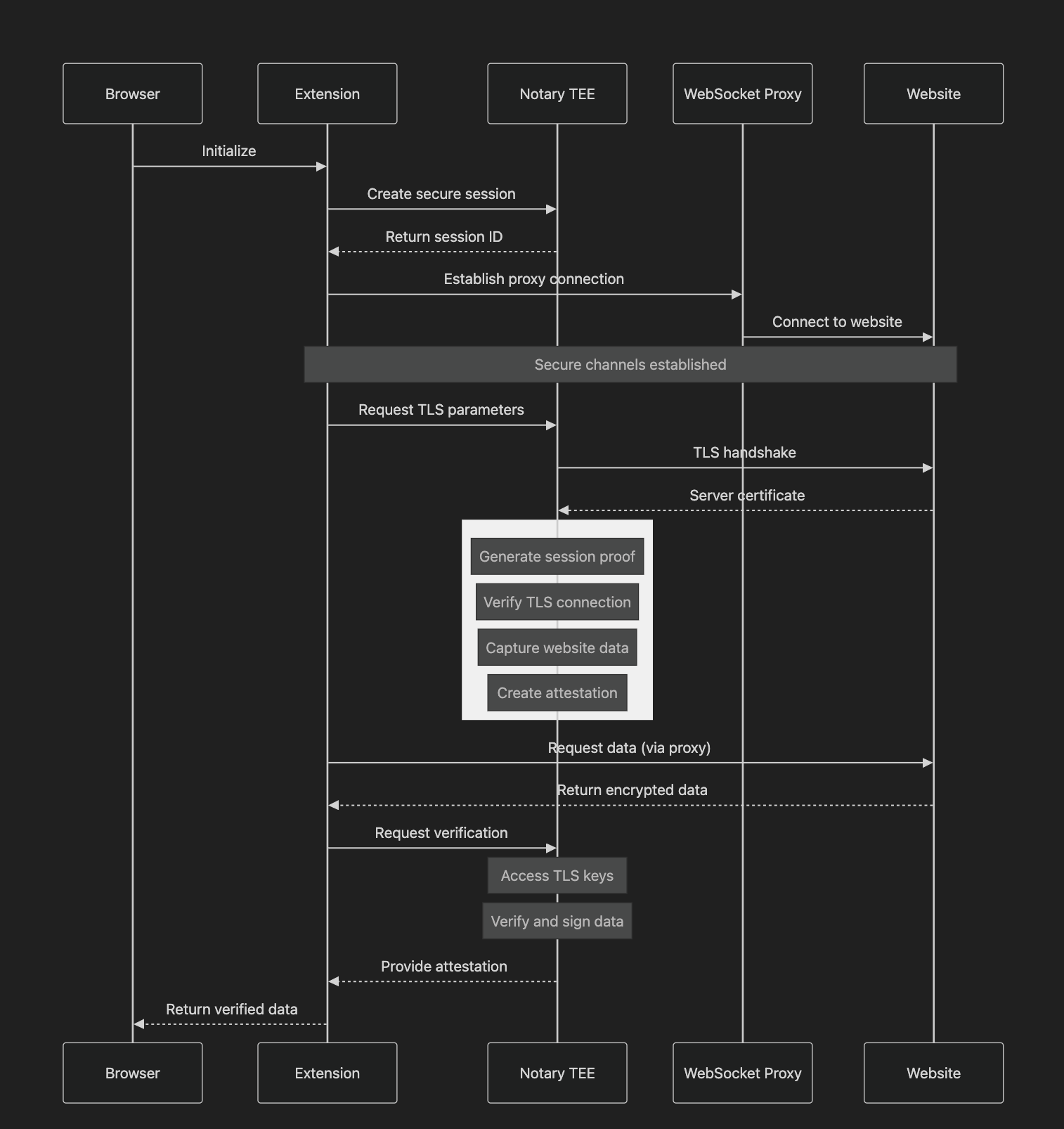

System Components

The implementation consists of three interconnected components:

Browser Environment. The browser extension serves as the primary gateway where humans and their personal agents initiate secure browsing sessions and receive attestations about web content. It manages cryptographic operations and orchestrates all communication channels, bridging the browser, Notary, and target servers.

Secure Infrastructure. A Notary is simply a TEE acting as a secure proxy for TLS connections. The Notary service provides hardware-level isolation while managing sessions and producing trusted attestations. The communication infrastructure utilizes two distinct WebSocket connections: one dedicated to Notary communications and another for proxied connections to target servers.
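The Notary sits on the proxied path between browser and target server, so it relays every TLS record in both directions. The sketch below shows one way it might commit to that traffic, assuming a simple SHA-256 hash chain over `(direction, record)` pairs. `TranscriptRecorder`, the direction labels, and the domain-separation string are hypothetical. They are not taken from the implementation described here.

```python
import hashlib
from typing import List, Tuple

CLIENT_TO_SERVER = b"C"
SERVER_TO_CLIENT = b"S"


class TranscriptRecorder:
    """Hash-chains every record relayed on the proxied WebSocket channel.

    Each link hashes the previous 32-byte digest, a 1-byte direction label,
    and the record. Reordering, dropping, or altering any record therefore
    changes the final digest.
    """

    def __init__(self) -> None:
        self._digest = hashlib.sha256(b"transcript-v0").digest()
        self.records: List[Tuple[bytes, bytes]] = []

    def relay(self, direction: bytes, record: bytes) -> bytes:
        """Log one record and return it unchanged for forwarding."""
        if direction not in (CLIENT_TO_SERVER, SERVER_TO_CLIENT):
            raise ValueError("unknown direction")
        self._digest = hashlib.sha256(self._digest + direction + record).digest()
        self.records.append((direction, record))
        return record

    def digest(self) -> str:
        return self._digest.hex()


a = TranscriptRecorder()
a.relay(CLIENT_TO_SERVER, b"request-ciphertext")
a.relay(SERVER_TO_CLIENT, b"response-ciphertext")
print(a.digest())
```

Keeping this transcript channel separate from the Notary control channel means the recorder only ever sees relayed traffic.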

Operational Flow. Initialization begins by activating the extension's WASM module and establishing a secure session. The TLS handshake then establishes verified, encrypted streams with the target servers. Request processing follows an optimized sequence so that attestations can be generated efficiently. Attestation generation can be triggered at any point, with the Notary handling key access, data decryption, and signature production.
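The ordering constraints in this flow can be captured as a small state machine. This is a hedged sketch. `Phase`, `SessionFlow`, and the method names are hypothetical. It also assumes that "at any point" means any point after the TLS handshake has produced an encrypted stream to attest.

```python
from enum import Enum, auto


class Phase(Enum):
    IDLE = auto()
    SESSION_READY = auto()    # WASM module active, secure session established
    TLS_ESTABLISHED = auto()  # handshake with the target server completed


class SessionFlow:
    """Enforces the order: initialize, handshake, then requests and attestations."""

    def __init__(self) -> None:
        self.phase = Phase.IDLE
        self.requests = 0

    def _require(self, expected: Phase, action: str) -> None:
        if self.phase is not expected:
            raise RuntimeError(f"cannot {action} in phase {self.phase.name}")

    def initialize(self) -> None:
        self._require(Phase.IDLE, "initialize")
        self.phase = Phase.SESSION_READY

    def handshake(self) -> None:
        self._require(Phase.SESSION_READY, "handshake")
        self.phase = Phase.TLS_ESTABLISHED

    def send_request(self) -> None:
        self._require(Phase.TLS_ESTABLISHED, "send a request")
        self.requests += 1

    def attest(self) -> int:
        """Request an attestation; returns how many requests it covers so far."""
        self._require(Phase.TLS_ESTABLISHED, "attest")
        return self.requests


flow = SessionFlow()
flow.initialize()
flow.handshake()
flow.send_request()
print(flow.attest())  # 1
```

Because `attest` does not end the session, an agent can request several attestations as a browsing session progresses.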

Conclusion

This architecture is a step toward truly verifiable web data that agents can use in their decision-making. By addressing the fundamental trust problem in web data consumption, it opens possibilities for more sophisticated and reliable agentic systems. The framework builds on foundational TLS attestation protocols such as Town Crier and TLSNotary. The upcoming browser extension delivers the fastest attestation speeds among public implementations of TLS attestation. It marks a crucial step toward verifiable machine-human interactions across all domains where trust and data integrity are essential.